The problem with GPT for the Humanities (and for humanity)

Video recording:

Speaker: Lorella Viola (Luxembourg Centre for Contemporary and Digital History (C2DH), University of Luxembourg)

Title: The problem with GPT for the Humanities (and for humanity)

Time: Wednesday, 2022.05.11, 10:00 a.m. (CET)

Place: fully virtual (contact Dr. Jakub Lengiewicz to register)

Format: 30 min. presentation + 30 min. discussion

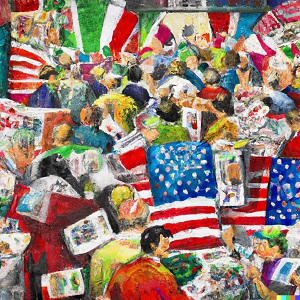

Abstract: GPT (Generative Pre-trained Transformer) models have become an increasingly popular choice among researchers and practitioners. Their success is mostly due to the technology’s ability to move beyond single-word predictions. Unlike traditional neural network language models, GPT generates text by looking at the entirety of the input text. Thus, rather than determining relevance sequentially by looking at the most recent segment of input, GPT models determine each word’s relevance selectively. However, while this ability allows the machine to ‘learn’ faster, the training datasets have to be fed in as one single document, meaning that all metadata (e.g., date, authors, original source) is inevitably lost. Moreover, as GPT models are trained on crawled English web material from 2016, they are not only ignorant of the world prior to that date, but they also express the language as used exclusively by English-speaking users (mostly young white males). They also expect data of pristine quality, in the sense that they have been trained on born-digital material, which does not present the typical problems of digitized historical content (e.g., OCR mistakes, unusual fonts). Although GPT is a powerful technology, these issues seriously hinder its application to humanistic enquiry, particularly historical research. In this presentation, I discuss these and other problematic aspects of GPT, and I present the specific challenges I encountered while working on a historical archive of Italian American immigrant newspapers.