TensAIR: an asynchronous and decentralized framework to distribute artificial neural networks training

Speaker: Mauro Dalle Lucca Tosi (Faculty of Science, Technology and Medicine; University of Luxembourg)

Title: TensAIR: an asynchronous and decentralized framework to distribute artificial neural networks training

Time: Wednesday, 2021.09.15, 10:00 a.m. (CET)

Place: fully virtual (contact Jakub Lengiewicz to register)

Format: 30 min. presentation + 30 min. discussion

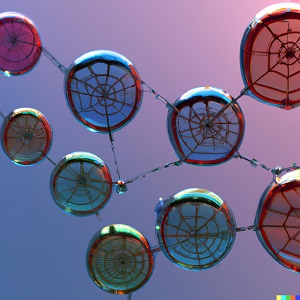

Abstract: In recent decades, artificial neural networks (ANNs) have drawn attention from academia and industry for their ability to represent and solve complex problems. ANNs use algorithms based on stochastic gradient descent (SGD) to learn data patterns from training examples, which tends to be time-consuming. Researchers are studying how to distribute this computation across multiple GPUs to reduce training time. Modern implementations rely on either synchronously scaling up resources or asynchronously scaling them out using a centralized communication network. However, both approaches have communication bottlenecks, which may limit how well they scale. In this research, we create TensAIR, a framework that scales out the training of sparse ANN models in an asynchronous and decentralized manner. Due to the commutative properties of SGD updates, we linearly scaled out the number of gradients computed per second with minimal impact on the convergence of the models, relative to the models’ sparseness. These results indicate that TensAIR enables the training of sparse neural networks in significantly less time. We conjecture that this article may inspire further studies on the use of sparse ANNs in time-sensitive scenarios such as online machine learning, which until now would not have been considered feasible.
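
The commutativity argument in the abstract can be illustrated with a minimal sketch. This is not TensAIR's implementation: the function names, the toy parameter vector, and the learning rate are all hypothetical, and the gradients are assumed to be precomputed, so the sketch ignores the staleness that arises when workers compute gradients on outdated parameters. It only shows that additive sparse updates reach the same parameters regardless of the order in which they arrive.

```python
import itertools

def apply_sparse_update(params, update, lr=0.1):
    """Apply one sparse SGD step: only the indices present in `update` change."""
    new_params = list(params)
    for index, grad in update.items():
        new_params[index] -= lr * grad
    return new_params

def apply_in_order(params, updates, lr=0.1):
    """Apply a sequence of sparse updates one after another."""
    for update in updates:
        params = apply_sparse_update(params, update, lr)
    return params

# Hypothetical toy model with four parameters.
params = [1.0, 2.0, 3.0, 4.0]

# Hypothetical precomputed sparse gradients, e.g. from three workers.
# Each maps a parameter index to its gradient value.
updates = [{0: 0.5}, {2: -1.0}, {0: 0.25, 3: 2.0}]

# Apply the same updates in every possible arrival order.
results = [
    apply_in_order(params, order)
    for order in itertools.permutations(updates)
]

# All orders agree up to floating-point rounding.
all_close = all(
    abs(a - b) < 1e-12
    for result in results
    for a, b in zip(result, results[0])
)
print(all_close)
```

The sparser the updates, the less often two workers touch the same parameter, which is one intuition for why the abstract ties the convergence impact to the models' sparseness.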